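Live Preview and Snapshot Capture with Hikvision Cameras

Contents: Background; Approach and workflow; Development steps (1. The Hikvision SDK only needs to be initialized once at project startup, so I put the SDK initialization and DLL loading code straight into the startup class; 2. Live preview first: I pull the stream directly over RTSP)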

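While developing a new system recently, I was responsible for the video surveillance module. Every monitoring device in the project is a Hikvision camera, a mix of bullet cameras and dome cameras. One requirement was to tile the camera feeds on a front-end page. The first plan was to use the official API, but it turned out that none of the project's devices are connected to the internet, so I had to switch to secondary development on the SDK. The language is Java and the framework is Spring Cloud.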

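There is plenty on Baidu about the general SDK call flow, so I won't go into detail. In short, it breaks down into these small steps:

1. Load the DLL library.

2. Initialize the SDK: NET_DVR_Init();

3. Log in to the device with the camera's IP, PORT, USERNAME and PWD: NET_DVR_Login_V40();

4. Once logged in, perform whatever operations you need; for me that is live preview and snapshot capture.

5. When the operations are done, log out of the device: NET_DVR_Logout();

6. When you are finished with the SDK, release its resources: NET_DVR_Cleanup();

7. Clock out.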
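The seven steps reduce to one ordering rule: whatever succeeded is undone in reverse order, even when a later step fails. Below is a minimal sketch of that rule, assuming a hypothetical `CameraSdk` interface whose methods only mirror the Hikvision calls (the project itself calls them through the JNA `HCNetSDK` interface) and a fake implementation that just records the call order. In a long-running service, init and cleanup would happen once per process rather than once per operation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the SDK: method names only mirror NET_DVR_Init, NET_DVR_Login_V40,
// NET_DVR_CaptureJPEGPicture, NET_DVR_Logout and NET_DVR_Cleanup.
interface CameraSdk {
    boolean init();
    int login(String ip, short port, String user, String password); // -1 means failure
    boolean capture(int userId);
    boolean logout(int userId);
    boolean cleanup();
}

// Fake implementation that only records the order of calls.
class RecordingSdk implements CameraSdk {
    final List<String> calls = new ArrayList<>();
    private final int loginResult;
    private final boolean captureResult;

    RecordingSdk(int loginResult, boolean captureResult) {
        this.loginResult = loginResult;
        this.captureResult = captureResult;
    }

    public boolean init() { calls.add("init"); return true; }
    public int login(String ip, short port, String user, String password) { calls.add("login"); return loginResult; }
    public boolean capture(int userId) { calls.add("capture"); return captureResult; }
    public boolean logout(int userId) { calls.add("logout"); return true; }
    public boolean cleanup() { calls.add("cleanup"); return true; }
}

class SdkLifecycle {
    // Steps 2-6: every step that succeeded is undone in reverse order, even if a later step fails.
    static boolean run(CameraSdk sdk, String ip, short port, String user, String password) {
        if (!sdk.init()) {
            return false;
        }
        try {
            int userId = sdk.login(ip, port, user, password);
            if (userId == -1) {
                return false; // nothing to log out; cleanup still runs in the outer finally
            }
            try {
                return sdk.capture(userId);
            } finally {
                sdk.logout(userId); // step 5 runs even when the capture failed
            }
        } finally {
            sdk.cleanup(); // step 6 runs even when the login failed
        }
    }
}
```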

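Following the flow above, I'll paste my code step by step. As for how to import the SDK into the project, both the official website and the SDK package explain it, and failing that, CSDN has plenty of tutorials.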

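Step 1: the Hikvision SDK only needs to be initialized once at project startup, so the SDK initialization and DLL loading are triggered straight from the startup class:

    import javax.annotation.PostConstruct;

    import lombok.extern.slf4j.Slf4j;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
    import org.springframework.boot.context.properties.EnableConfigurationProperties;

    // @EnableCustomConfig, @EnableCustomSwagger2 and @EnableRyFeignClients come from the RuoYi-Cloud framework (imports omitted)
    @EnableCustomConfig
    @EnableCustomSwagger2
    @EnableRyFeignClients
    @EnableConfigurationProperties
    @SpringBootApplication(exclude = {DataSourceAutoConfiguration.class})
    @Slf4j
    public class Start_monitor_9500 {
        public static void main(String[] args) {
            SpringApplication.run(Start_monitor_9500.class, args);
        }

        // Runs once when the context starts: load the native library, then call NET_DVR_Init
        @PostConstruct
        public void initSDK() {
            new HCUtils().beforeInit();
        }
    }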
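`@PostConstruct` on the application class runs once, when the Spring context creates that bean, which is what makes it a reasonable place for `NET_DVR_Init`. If initialization could also be triggered from elsewhere (tests, a second context, a manual re-init), a small guard keeps the native call to a single attempt. A sketch, assuming a hypothetical `OnceInitializer` wrapped around whatever does the real work (for example `() -> { new HCUtils().beforeInit(); return true; }`):

```java
import java.util.function.BooleanSupplier;

// Hypothetical guard: the wrapped init action runs at most once, even with concurrent callers.
class OnceInitializer {
    private final BooleanSupplier initAction;
    private boolean attempted;
    private boolean succeeded;

    OnceInitializer(BooleanSupplier initAction) {
        this.initAction = initAction;
    }

    // The first caller runs the action; later callers get the recorded result without re-running it.
    synchronized boolean ensureInitialized() {
        if (!attempted) {
            attempted = true;
            succeeded = initAction.getAsBoolean();
        }
        return succeeded;
    }
}
```

A failed attempt is deliberately not retried here; whether to retry a failed native init is a policy choice for the project.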

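Step 2: live preview. I pull the stream directly over RTSP, so the first job is building the RTSP URL. Examples of the old-style URL format:

- Channel 01 main stream: rtsp://admin:test1234@172.6.22.106:554/h264/ch01/main/av_stream
- Channel 01 sub-stream: rtsp://admin:test1234@172.6.22.106:554/h264/ch01/sub/av_stream
- Channel 01 third stream: rtsp://admin:test1234@172.6.22.106:554/h264/ch01/stream3/av_stream
- IP channel 01 main stream: rtsp://admin:test1234@172.6.22.106:554/h264/ch33/main/av_stream
- IP channel 01 sub-stream: rtsp://admin:test1234@172.6.22.106:554/h264/ch33/sub/av_stream
- Zero channel main stream (the zero channel has no sub-stream): rtsp://admin:test1234@172.6.22.106:554/h264/ch0/main/av_stream

Note: in the old URL format, IP channel numbers on NVRs with fewer than 64 channels start at 33, while on NVRs with 64 or more channels they start at 1. These are the old-style rules. No SDK is needed for this part; you simply build the string from your device's details.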
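Since the old-style URL is plain string concatenation, a small builder keeps the format in one place. A sketch; `LegacyRtspUrl` is a hypothetical helper, and the padding rule (`ch01`, `ch33`, but `ch0` for the zero channel) is inferred from the examples above rather than taken from Hikvision documentation:

```java
import java.util.Locale;

// Hypothetical helper for the old-style Hikvision RTSP URL:
// rtsp://user:password@ip:port/h264/ch{channel}/{stream}/av_stream
class LegacyRtspUrl {
    // stream is "main", "sub" or "stream3"; channel 0 is the zero channel (main stream only)
    static String build(String user, String password, String ip, int port, int channel, String stream) {
        // Padding inferred from the examples: ch01 and ch33, but ch0 for the zero channel
        String ch = channel == 0 ? "0" : String.format(Locale.ROOT, "%02d", channel);
        return String.format(Locale.ROOT, "rtsp://%s:%s@%s:%d/h264/ch%s/%s/av_stream",
                user, password, ip, port, ch, stream);
    }
}
```

For a camera connected directly (no NVR), the channel is 1, e.g. `LegacyRtspUrl.build("admin", password, "192.168.200.192", 554, 1, "main")`.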

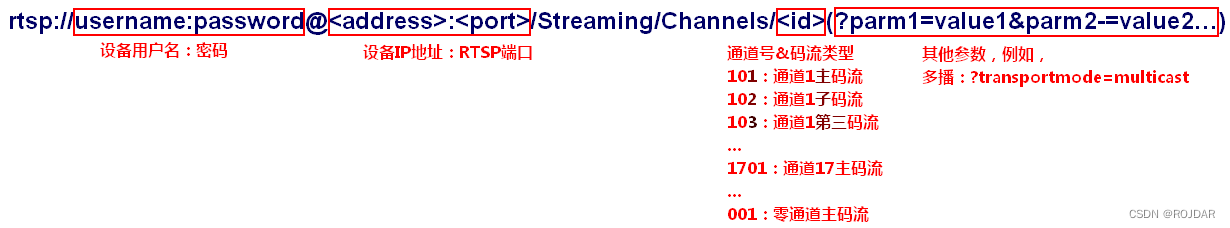

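Examples of the new-style URL format:

- Channel 01 main stream: rtsp://admin:abc12345@172.6.22.234:554/Streaming/Channels/101?transportmode=unicast
- Channel 01 sub-stream (unicast): rtsp://admin:abc12345@172.6.22.234:554/Streaming/Channels/102?transportmode=unicast
- Channel 01 sub-stream (multicast): rtsp://admin:abc12345@172.6.22.106:554/Streaming/Channels/102?transportmode=multicast
- Channel 01 sub-stream with the query omitted (defaults to unicast): rtsp://admin:abc12345@172.6.22.106:554/Streaming/Channels/102
- Channel 01 third stream: rtsp://admin:abc12345@172.6.22.234:554/Streaming/Channels/103?transportmode=unicast
- Zero channel main stream (the zero channel has no sub-stream): rtsp://admin:12345@172.6.22.106:554/Streaming/Channels/001

Note: in the new URL format, channel numbers always start at 1 and run sequentially. Those are the new-style rules. As for which format to use, Hikvision says either works, so I went with the old one. My project also has no video recorders; everything connects directly to the cameras, so my channel number is 01. The assembled URL looks like this: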

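    rtsp://admin:CTUZB@swc123@192.168.200.192:554/h264/ch01/main/av_stream

To check that the URL is correct, download a player such as VLC, enter the address, click play and see whether video appears. VLC is easy to find with a quick search.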
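The password in this URL contains `@`, which RFC 3986 reserves as the separator between credentials and host. Some clients cope with it and some do not; if a player or FFmpeg build rejects the URL, percent-encoding the credentials (`@` becomes `%40`) is the standard remedy, though whether a given client decodes them again is worth checking in VLC first. A sketch under those assumptions, with hypothetical helper names, that also builds the new-style `/Streaming/Channels/` URL (channel number followed by a two-digit stream number, inferred from the examples: `101`, `102`, `103`, and `001` for the zero channel):

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Hypothetical helpers for Hikvision RTSP URLs.
class RtspUrls {
    private static final String HEX = "0123456789ABCDEF";

    // RFC 3986: keep unreserved characters, percent-encode every other UTF-8 byte (so '@' becomes %40).
    static String encodeUserInfo(String value) {
        StringBuilder out = new StringBuilder();
        for (byte b : value.getBytes(StandardCharsets.UTF_8)) {
            int c = b & 0xFF;
            boolean unreserved = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z') || (c >= '0' && c <= '9')
                    || c == '-' || c == '.' || c == '_' || c == '~';
            if (unreserved) {
                out.append((char) c);
            } else {
                out.append('%').append(HEX.charAt(c >> 4)).append(HEX.charAt(c & 0xF));
            }
        }
        return out.toString();
    }

    // New-style URL: channel number followed by a two-digit stream number (1 = main, 2 = sub, 3 = third).
    // transportMode is "unicast", "multicast", or null to omit the query (which defaults to unicast).
    static String streaming(String user, String password, String ip, int port,
                            int channel, int stream, String transportMode) {
        String id = channel + String.format(Locale.ROOT, "%02d", stream);
        String url = String.format(Locale.ROOT, "rtsp://%s:%s@%s:%d/Streaming/Channels/%s",
                encodeUserInfo(user), encodeUserInfo(password), ip, port, id);
        return transportMode == null ? url : url + "?transportmode=" + transportMode;
    }
}
```

For the old-style URL above, the encoded form would be `"rtsp://admin:" + RtspUrls.encodeUserInfo("CTUZB@swc123") + "@192.168.200.192:554/h264/ch01/main/av_stream"`.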

The utility class. This one matters: all my methods live here. `HCNetSDK` is the official SDK interface class provided by Hikvision; once the SDK resources are imported correctly, it compiles without errors.

    import cn.com.guanfang.web.domain.AjaxResult;
    import com.sun.jna.Native;
    import com.sun.jna.ptr.IntByReference;
    import lombok.extern.slf4j.Slf4j;

    @Slf4j
    public class HCUtils {
        public static HCNetSDK hCNetSDK = HCNetSDK.INSTANCE;
        public static int g_lVoiceHandle;
        static HCNetSDK.NET_DVR_DEVICEINFO_V30 m_strDeviceInfo;
        static HCNetSDK.NET_DVR_IPPARACFG m_strIpparaCfg;
        static HCNetSDK.NET_DVR_CLIENTINFO m_strClientInfo;
        // Keep a strong reference to the JNA callback so it is not garbage-collected while native code uses it
        private static final FRealDataCallBack REAL_DATA_CALLBACK = new FRealDataCallBack();
        boolean bRealPlay;
        String m_sDeviceIP;
        int lPreviewHandle;
        IntByReference m_lPort;
        int lAlarmHandle;
        int lListenHandle;
        static int lUserID;
        int iErr = 0;

        public static boolean CreateSDKInstance() {
            if (hCNetSDK == null) {
                synchronized (HCNetSDK.class) {
                    String strDllPath = "";
                    try {
                        if (osSelect.isWindows()) {
                            // Library path on Windows
                            strDllPath = System.getProperty("user.dir") + "\\HCNetSDK.dll";
                        } else if (osSelect.isLinux()) {
                            // Library path on Linux
                            strDllPath = "/home/hik/LinuxSDK/libhcnetsdk.so";
                        }
                        hCNetSDK = (HCNetSDK) Native.loadLibrary(strDllPath, HCNetSDK.class);
                    } catch (Exception | UnsatisfiedLinkError ex) {
                        // JNA reports a missing library as UnsatisfiedLinkError, which is not an Exception
                        log.error("loadLibrary: {} Error: {}", strDllPath, ex.getMessage());
                        return false;
                    }
                }
            }
            return true;
        }

        public Integer Login_V40(String m_sDeviceIP, short wPort, String m_sUsername, String m_sPassword) {
            // Device login info
            HCNetSDK.NET_DVR_USER_LOGIN_INFO m_strLoginInfo = new HCNetSDK.NET_DVR_USER_LOGIN_INFO();
            // Device info, filled in by the SDK on successful login
            HCNetSDK.NET_DVR_DEVICEINFO_V40 m_strDeviceInfo = new HCNetSDK.NET_DVR_DEVICEINFO_V40();
            m_strLoginInfo.sDeviceAddress = new byte[HCNetSDK.NET_DVR_DEV_ADDRESS_MAX_LEN];
            byte[] ip = m_sDeviceIP.getBytes();
            System.arraycopy(ip, 0, m_strLoginInfo.sDeviceAddress, 0, ip.length);
            m_strLoginInfo.wPort = wPort;
            m_strLoginInfo.sUserName = new byte[HCNetSDK.NET_DVR_LOGIN_USERNAME_MAX_LEN];
            byte[] user = m_sUsername.getBytes();
            System.arraycopy(user, 0, m_strLoginInfo.sUserName, 0, user.length);
            m_strLoginInfo.sPassword = new byte[HCNetSDK.NET_DVR_LOGIN_PASSWD_MAX_LEN];
            byte[] pwd = m_sPassword.getBytes();
            System.arraycopy(pwd, 0, m_strLoginInfo.sPassword, 0, pwd.length);
            // Asynchronous login: false = no, true = yes
            m_strLoginInfo.bUseAsynLogin = false;
            // The data reaches native memory only after write()
            m_strLoginInfo.write();
            lUserID = hCNetSDK.NET_DVR_Login_V40(m_strLoginInfo, m_strDeviceInfo);
            if (lUserID != -1) {
                // The structure's fields are populated only after read()
                m_strDeviceInfo.read();
            }
            return lUserID;
        }

        public AjaxResult Logout() {
            if (lUserID >= 0 && !hCNetSDK.NET_DVR_Logout(lUserID)) {
                return AjaxResult.error(1000, "Logout failed, error code: " + hCNetSDK.NET_DVR_GetLastError());
            }
            return AjaxResult.success("Logged out");
        }

        public Integer startRealPlay(Integer lUserID) throws Exception {
            HCNetSDK.NET_DVR_PREVIEWINFO previewInfo = new HCNetSDK.NET_DVR_PREVIEWINFO();
            previewInfo.lChannel = 1;
            previewInfo.dwStreamType = 0; // 0 = main stream
            previewInfo.dwLinkMode = 5;
            previewInfo.hPlayWnd = null;
            previewInfo.bBlocked = 0; // 0 = non-blocking
            previewInfo.bPassbackRecord = 0;
            previewInfo.byPreviewMode = 0;
            previewInfo.byProtoType = 1;
            previewInfo.dwDisplayBufNum = 15;
            previewInfo.write();
            int realPlayV40 = hCNetSDK.NET_DVR_RealPlay_V40(lUserID, previewInfo, REAL_DATA_CALLBACK, null);
            if (realPlayV40 < 0) {
                throw new Exception("Preview failed, error code: " + hCNetSDK.NET_DVR_GetLastError());
            }
            return realPlayV40;
        }

        // NET_DVR_StopRealPlay takes the preview handle returned by startRealPlay, not the login user ID
        private void stopRealPlay(int lRealHandle) {
            if (!hCNetSDK.NET_DVR_StopRealPlay(lRealHandle)) {
                log.error("Stopping preview failed, error code: {}", hCNetSDK.NET_DVR_GetLastError());
            }
        }

        public void init() {
            // SDK initialization: call it only once per process
            if (!hCNetSDK.NET_DVR_Init()) {
                log.error("SDK initialization failed");
                return;
            }
            // Enable SDK logging to file
            hCNetSDK.NET_DVR_SetLogToFile(3, "..\\sdkLog\\", false);
            log.info("SDK initialized");
        }

        public void beforeInit() {
            if (hCNetSDK == null && !CreateSDKInstance()) {
                log.error("Failed to load the SDK library");
                return;
            }
            // On Linux, loading the component libraries with the calls below is recommended
            if (osSelect.isLinux()) {
                HCNetSDK.BYTE_ARRAY ptrByteArray1 = new HCNetSDK.BYTE_ARRAY(256);
                HCNetSDK.BYTE_ARRAY ptrByteArray2 = new HCNetSDK.BYTE_ARRAY(256);
                // Absolute library paths: adjust them to your environment; the process needs access to them
                String strPath1 = System.getProperty("user.dir") + "/lib/libcrypto.so.1.1";
                String strPath2 = System.getProperty("user.dir") + "/lib/libssl.so.1.1";
                System.arraycopy(strPath1.getBytes(), 0, ptrByteArray1.byValue, 0, strPath1.length());
                ptrByteArray1.write();
                hCNetSDK.NET_DVR_SetSDKInitCfg(3, ptrByteArray1.getPointer()); // 3: libcrypto path
                System.arraycopy(strPath2.getBytes(), 0, ptrByteArray2.byValue, 0, strPath2.length());
                ptrByteArray2.write();
                hCNetSDK.NET_DVR_SetSDKInitCfg(4, ptrByteArray2.getPointer()); // 4: libssl path
                String strPathCom = System.getProperty("user.dir") + "/lib";
                HCNetSDK.NET_DVR_LOCAL_SDK_PATH struComPath = new HCNetSDK.NET_DVR_LOCAL_SDK_PATH();
                System.arraycopy(strPathCom.getBytes(), 0, struComPath.sPath, 0, strPathCom.length());
                struComPath.write();
                hCNetSDK.NET_DVR_SetSDKInitCfg(2, struComPath.getPointer()); // 2: SDK component directory
            }
            init();
        }

        public AjaxResult getDVRPic(Device dvr, String imgPath) {
            // Log in; the returned user ID identifies this session
            int userId = Login_V40(dvr.getIp(), dvr.getPort(), dvr.getUserName(), dvr.getPassWord());
            if (userId == -1) {
                int err = hCNetSDK.NET_DVR_GetLastError();
                log.error("Camera login failed during capture, error code: {}", err);
                return AjaxResult.error(1000, "Camera login failed during capture, error code: " + err);
            }
            try {
                // Check that the device can report its working state
                HCNetSDK.NET_DVR_WORKSTATE_V30 devwork = new HCNetSDK.NET_DVR_WORKSTATE_V30();
                if (!hCNetSDK.NET_DVR_GetDVRWorkState_V30(userId, devwork)) {
                    int err = hCNetSDK.NET_DVR_GetLastError();
                    log.error("Camera failed to return its state during capture, error code: {}", err);
                    return AjaxResult.error(1000, "Camera failed to return its state during capture, error code: " + err);
                }
                HCNetSDK.NET_DVR_JPEGPARA jpeg = new HCNetSDK.NET_DVR_JPEGPARA();
                // Picture resolution
                jpeg.wPicSize = 0;
                // Picture quality
                jpeg.wPicQuality = 0;
                String path = imgPath + System.currentTimeMillis() + ".jpeg";
                if (!hCNetSDK.NET_DVR_CaptureJPEGPicture(userId, 1, jpeg, path)) {
                    int err = hCNetSDK.NET_DVR_GetLastError();
                    log.error("Capture failed, error code: {}", err);
                    return AjaxResult.error(1000, "Capture failed, error code: " + err);
                }
                return AjaxResult.success(path);
            } finally {
                // Log out even when a step above failed
                hCNetSDK.NET_DVR_Logout(userId);
            }
        }
    }

The next three classes pull the RTSP stream with JavaCV and write it to the browser's HTTP response as FLV.

First class, the `Converter` interface:

    import javax.servlet.AsyncContext;
    import java.io.IOException;
    import java.time.LocalDate;

    public interface Converter {
        String getKey();
        String getUrl();
        void addOutputStreamEntity(String key, AsyncContext entity) throws IOException;
        void exit();
        void start();
        int respNum();
        LocalDate obtainCreatedDate();
    }

Second class, `ConverterFactories`, which remuxes without transcoding when the source is already H.264 (with AAC or no audio):

    import com.alibaba.fastjson.util.IOUtils;
    import lombok.extern.slf4j.Slf4j;
    import org.bytedeco.ffmpeg.avcodec.AVPacket;
    import org.bytedeco.ffmpeg.global.avcodec;
    import org.bytedeco.ffmpeg.global.avutil;
    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.FFmpegFrameRecorder;

    import javax.servlet.AsyncContext;
    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.time.LocalDate;
    import java.util.List;
    import java.util.Map;

    import static org.bytedeco.ffmpeg.global.avutil.AV_LOG_ERROR;

    @Slf4j
    public class ConverterFactories extends Thread implements Converter {
        public volatile boolean runing = true;
        private final LocalDate date = LocalDate.now();
        private FFmpegFrameGrabber grabber;
        private FFmpegFrameRecorder recorder;
        private byte[] headers;
        private ByteArrayOutputStream stream;
        private final String url;
        private final String key;
        private final Map<String, Converter> factories;
        // Must be thread-safe (a CopyOnWriteArrayList): request threads add clients while this thread iterates
        private final List<AsyncContext> outEntitys;

        public ConverterFactories(String url, String key, Map<String, Converter> factories, List<AsyncContext> outEntitys) {
            this.url = url;
            this.key = key;
            this.factories = factories;
            this.outEntitys = outEntitys;
        }

        @Override
        public void run() {
            // Log errors only
            avutil.av_log_set_level(AV_LOG_ERROR);
            boolean isCloseGrabberAndResponse = true;
            try {
                grabber = new FFmpegFrameGrabber(url);
                if (url.startsWith("rtsp")) {
                    grabber.setOption("rtsp_transport", "tcp");
                    // Socket timeout in microseconds (5 s)
                    grabber.setOption("stimeout", "5000000");
                }
                grabber.start();
                if (avcodec.AV_CODEC_ID_H264 == grabber.getVideoCodec()
                        && (grabber.getAudioChannels() == 0 || avcodec.AV_CODEC_ID_AAC == grabber.getAudioCodec())) {
                    // Source video is H.264 and audio is AAC (or absent):
                    // no transcoding needed, so lower resource use and lower latency
                    log.info("Start pulling stream over RTSP: {}", url);
                    stream = new ByteArrayOutputStream();
                    recorder = new FFmpegFrameRecorder(stream, grabber.getImageWidth(), grabber.getImageHeight(), grabber.getAudioChannels());
                    recorder.setInterleaved(true);
                    recorder.setVideoOption("preset", "ultrafast");
                    recorder.setVideoOption("tune", "zerolatency");
                    recorder.setVideoOption("crf", "25");
                    recorder.setFrameRate(grabber.getFrameRate());
                    recorder.setSampleRate(grabber.getSampleRate());
                    if (grabber.getAudioChannels() > 0) {
                        recorder.setAudioChannels(grabber.getAudioChannels());
                        recorder.setAudioBitrate(grabber.getAudioBitrate());
                        recorder.setAudioCodec(grabber.getAudioCodec());
                    }
                    recorder.setFormat("flv");
                    recorder.setVideoBitrate(grabber.getVideoBitrate());
                    recorder.setVideoCodec(grabber.getVideoCodec());
                    // Take codec parameters from the input so packets can be copied without decoding
                    recorder.start(grabber.getFormatContext());
                    if (headers == null) {
                        // The FLV header written by start() is kept for clients that join later
                        headers = stream.toByteArray();
                        stream.reset();
                        writeResponse(headers);
                    }
                    long runTime, stopTime;
                    runTime = stopTime = System.currentTimeMillis();
                    while (runing) {
                        AVPacket k = grabber.grabPacket();
                        if (k != null) {
                            try {
                                recorder.recordPacket(k);
                            } catch (Exception ignored) {
                            }
                            avcodec.av_packet_unref(k);
                            if (stream.size() > 0) {
                                byte[] b = stream.toByteArray();
                                stream.reset();
                                writeResponse(b);
                                if (outEntitys.isEmpty()) {
                                    // No clients left
                                    break;
                                }
                            }
                            runTime = System.currentTimeMillis();
                        } else {
                            stopTime = System.currentTimeMillis();
                        }
                        // Give up after 10 s without packets
                        if ((stopTime - runTime) > 10000) {
                            break;
                        }
                    }
                } else {
                    log.info("Start pulling stream over RTSP: {}", url);
                    isCloseGrabberAndResponse = false;
                    // Needs transcoding to H.264 video and AAC audio: hand the open grabber to a transcoding thread
                    ConverterTranFactories c = new ConverterTranFactories(url, key, factories, outEntitys, grabber);
                    factories.put(key, c);
                    c.setName("flv-tran-[" + url + "]");
                    c.start();
                }
            } catch (Exception e) {
                log.error(e.getMessage(), e);
            } finally {
                closeConverter(isCloseGrabberAndResponse);
                completeResponse(isCloseGrabberAndResponse);
            }
        }

        public void writeResponse(byte[] b) {
            for (AsyncContext o : outEntitys) {
                try {
                    o.getResponse().getOutputStream().write(b);
                } catch (Exception e) {
                    // The client went away: drop this output (safe while iterating a CopyOnWriteArrayList)
                    outEntitys.remove(o);
                }
            }
        }

        public void closeConverter(boolean isCloseGrabberAndResponse) {
            if (isCloseGrabberAndResponse) {
                IOUtils.close(grabber);
                factories.remove(this.key);
            }
            IOUtils.close(recorder);
            IOUtils.close(stream);
        }

        public void completeResponse(boolean isCloseGrabberAndResponse) {
            if (isCloseGrabberAndResponse) {
                for (AsyncContext o : outEntitys) {
                    o.complete();
                }
            }
        }

        @Override
        public String getKey() {
            return this.key;
        }

        @Override
        public String getUrl() {
            return this.url;
        }

        @Override
        public void addOutputStreamEntity(String key, AsyncContext entity) throws IOException {
            if (headers != null) {
                // A late joiner needs the FLV header before any media data
                entity.getResponse().getOutputStream().write(headers);
                entity.getResponse().getOutputStream().flush();
            }
            outEntitys.add(entity);
        }

        @Override
        public void exit() {
            this.runing = false;
            try {
                this.join();
            } catch (Exception e) {
                log.error(e.getMessage(), e);
            }
        }

        @Override
        public int respNum() {
            return outEntitys.size();
        }

        @Override
        public LocalDate obtainCreatedDate() {
            return date;
        }
    }

Third class, `ConverterTranFactories`, which transcodes any other input to H.264/AAC:

    import com.alibaba.fastjson.util.IOUtils;
    import lombok.extern.slf4j.Slf4j;
    import org.bytedeco.ffmpeg.global.avcodec;
    import org.bytedeco.ffmpeg.global.avutil;
    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.FFmpegFrameRecorder;
    import org.bytedeco.javacv.Frame;

    import javax.servlet.AsyncContext;
    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.time.LocalDate;
    import java.util.List;
    import java.util.Map;

    import static org.bytedeco.ffmpeg.global.avutil.AV_LOG_ERROR;

    @Slf4j
    public class ConverterTranFactories extends Thread implements Converter {
        public volatile boolean runing = true;
        private final LocalDate date = LocalDate.now();
        private final FFmpegFrameGrabber grabber;
        private FFmpegFrameRecorder recorder;
        private byte[] headers;
        private ByteArrayOutputStream stream;
        private final String url;
        private final String key;
        private final Map<String, Converter> factories;
        private final List<AsyncContext> outEntitys;

        public ConverterTranFactories(String url, String key, Map<String, Converter> factories,
                                      List<AsyncContext> outEntitys, FFmpegFrameGrabber grabber) {
            this.url = url;
            this.key = key;
            this.factories = factories;
            this.outEntitys = outEntitys;
            // Already started by ConverterFactories
            this.grabber = grabber;
        }

        @Override
        public void run() {
            // Log errors only
            avutil.av_log_set_level(AV_LOG_ERROR);
            try {
                grabber.setFrameRate(25);
                // Cap the output at 1920x1080
                if (grabber.getImageWidth() > 1920) {
                    grabber.setImageWidth(1920);
                }
                if (grabber.getImageHeight() > 1080) {
                    grabber.setImageHeight(1080);
                }
                stream = new ByteArrayOutputStream();
                recorder = new FFmpegFrameRecorder(stream, grabber.getImageWidth(), grabber.getImageHeight(), grabber.getAudioChannels());
                recorder.setInterleaved(true);
                recorder.setVideoOption("preset", "ultrafast");
                recorder.setVideoOption("tune", "zerolatency");
                recorder.setVideoOption("crf", "25");
                recorder.setVideoOption("colorspace", "bt709");
                recorder.setVideoOption("color_range", "jpeg");
                // One keyframe every 50 frames (2 s at 25 fps)
                recorder.setGopSize(50);
                recorder.setFrameRate(25);
                recorder.setPixelFormat(tranFormat(grabber.getPixelFormat()));
                recorder.setSampleRate(grabber.getSampleRate());
                if (grabber.getAudioChannels() > 0) {
                    recorder.setAudioChannels(grabber.getAudioChannels());
                    recorder.setAudioBitrate(grabber.getAudioBitrate());
                    recorder.setAudioCodec(avcodec.AV_CODEC_ID_AAC);
                }
                recorder.setFormat("flv");
                recorder.setVideoBitrate(grabber.getVideoBitrate());
                recorder.setVideoCodec(avcodec.AV_CODEC_ID_H264);
                recorder.start();
                if (headers == null) {
                    headers = stream.toByteArray();
                    stream.reset();
                    writeResponse(headers);
                }
                long runTime, stopTime;
                runTime = stopTime = System.currentTimeMillis();
                while (runing) {
                    // Decode a frame, then re-encode it as H.264/AAC
                    Frame f = grabber.grab();
                    if (f != null) {
                        try {
                            recorder.record(f);
                        } catch (Exception ignored) {
                        }
                        if (stream.size() > 0) {
                            byte[] b = stream.toByteArray();
                            stream.reset();
                            writeResponse(b);
                            if (outEntitys.isEmpty()) {
                                log.info("No clients left, exiting");
                                break;
                            }
                        }
                        runTime = System.currentTimeMillis();
                    } else {
                        stopTime = System.currentTimeMillis();
                    }
                    // Give up after 10 s without frames
                    if ((stopTime - runTime) > 10000) {
                        break;
                    }
                }
            } catch (Exception e) {
                log.error(e.getMessage(), e);
            } finally {
                closeConverter();
                completeResponse();
                log.info("RTSP stream closed normally: {}", url);
                factories.remove(this.key);
            }
        }

        // Map the deprecated full-range "J" pixel formats to their standard counterparts
        // (color_range=jpeg above carries the range instead); AV_PIX_FMT_NONE leaves the choice to the recorder
        private int tranFormat(int format) {
            switch (format) {
                case avutil.AV_PIX_FMT_YUVJ420P:
                    return avutil.AV_PIX_FMT_YUV420P;
                case avutil.AV_PIX_FMT_YUVJ422P:
                    return avutil.AV_PIX_FMT_YUV422P;
                case avutil.AV_PIX_FMT_YUVJ444P:
                    return avutil.AV_PIX_FMT_YUV444P;
                case avutil.AV_PIX_FMT_YUVJ440P:
                    return avutil.AV_PIX_FMT_YUV440P;
                default:
                    return avutil.AV_PIX_FMT_NONE;
            }
        }

        public void writeResponse(byte[] b) {
            for (AsyncContext o : outEntitys) {
                try {
                    o.getResponse().getOutputStream().write(b);
                } catch (Exception e) {
                    log.info("Removing a disconnected client");
                    outEntitys.remove(o);
                }
            }
        }

        public void closeConverter() {
            IOUtils.close(grabber);
            IOUtils.close(recorder);
            IOUtils.close(stream);
        }

        public void completeResponse() {
            for (AsyncContext o : outEntitys) {
                o.complete();
            }
        }

        @Override
        public String getKey() {
            return this.key;
        }

        @Override
        public String getUrl() {
            return this.url;
        }

        @Override
        public void addOutputStreamEntity(String key, AsyncContext entity) throws IOException {
            if (headers != null) {
                entity.getResponse().getOutputStream().write(headers);
                entity.getResponse().getOutputStream().flush();
            }
            outEntitys.add(entity);
        }

        @Override
        public void exit() {
            this.runing = false;
            try {
                this.join();
            } catch (Exception e) {
                log.error(e.getMessage(), e);
            }
        }

        @Override
        public int respNum() {
            return outEntitys.size();
        }

        @Override
        public LocalDate obtainCreatedDate() {
            return date;
        }
    }

The controller endpoint. Note that `url` here is the RTSP address, Base64-encoded, so you can have the front end encode the address before sending it, or encode it yourself. Base64 is an encoding, not encryption; and because standard Base64 output can contain `+`, `/` and `=`, the front end should also URL-encode the value (for example with `encodeURIComponent`) so that `+` is not decoded as a space.

    @GetMapping(value = "/monitor/live")
    public void open2(String url, HttpServletResponse response, HttpServletRequest request) {
        itdMonitorService.open(new String(Base64.getDecoder().decode(url), StandardCharsets.UTF_8), response, request);
    }

The service layer. I won't paste the interface; create one from the method names in the implementation.

    import org.springframework.util.DigestUtils;

    import java.nio.charset.StandardCharsets;
    import java.util.List;
    import java.util.Map;
    import java.util.concurrent.ConcurrentHashMap;
    import java.util.concurrent.CopyOnWriteArrayList;

    // One converter per source URL, shared by every client watching it
    private final Map<String, Converter> converters = new ConcurrentHashMap<>();

    @Override
    public void open(String url, HttpServletResponse response, HttpServletRequest request) {
        String key = md5(url);
        AsyncContext async = request.startAsync();
        // No timeout: the response stays open for as long as the stream runs
        async.setTimeout(0);
        Converter existing = converters.get(key);
        if (existing != null) {
            try {
                existing.addOutputStreamEntity(key, async);
            } catch (IOException e) {
                log.error(e.getMessage(), e);
                throw new IllegalArgumentException(e.getMessage());
            }
        } else {
            List<AsyncContext> outs = new CopyOnWriteArrayList<>();
            outs.add(async);
            ConverterFactories c = new ConverterFactories(url, key, converters, outs);
            c.setName("flv-[" + url + "]");
            // Register before starting, so the thread's own cleanup cannot run before the put
            converters.put(key, c);
            c.start();
        }
        // response.setContentType("video/x-flv");
        response.setHeader("Connection", "keep-alive");
        response.setStatus(HttpServletResponse.SC_OK);
        try {
            response.flushBuffer();
        } catch (IOException e) {
            log.error(e.getMessage(), e);
        }
    }

    // Hex MD5 of the URL, used as the converter key
    private static String md5(String text) {
        return DigestUtils.md5DigestAsHex(text.getBytes(StandardCharsets.UTF_8));
    }

The relevant pom.xml entries. Note that the properties at the bottom must be changed for a different operating system:

    <dependencies>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>javacv</artifactId>
            <version>1.5.6</version>
        </dependency>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>javacpp</artifactId>
            <version>1.5.6</version>
        </dependency>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>opencv</artifactId>
            <version>4.5.3-1.5.6</version>
            <classifier>${transcode-platform}</classifier>
        </dependency>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>openblas</artifactId>
            <version>0.3.17-1.5.6</version>
            <classifier>${transcode-platform}</classifier>
        </dependency>
        <dependency>
            <groupId>org.bytedeco</groupId>
            <artifactId>ffmpeg</artifactId>
            <version>4.4-1.5.6</version>
            <classifier>${transcode-platform}</classifier>
        </dependency>
    </dependencies>

    <properties>
        <!-- The names of the two "8" properties were lost in extraction; the compiler source/target pair is assumed -->
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
        <!-- windows-x86_64 on Windows; for example linux-x86_64 on 64-bit Linux -->
        <transcode-platform>windows-x86_64</transcode-platform>
    </properties>

That completes live preview. Pretty simple, right? It's all copy and paste :)
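The core of the live-preview design is a fan-out: one thread pulls each camera (keyed by the MD5 of its URL, so several browser tabs share one RTSP session), the FLV header produced by `recorder.start()` is cached and replayed to clients that join later, and a client whose write fails is dropped. A stripped-down sketch of just that part, using plain `OutputStream`s in place of `AsyncContext` responses; `FlvFanout` is a hypothetical name, not a class from the project:

```java
import java.io.IOException;
import java.io.OutputStream;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical simplification of the converter fan-out: one producer thread, many HTTP clients.
class FlvFanout {
    private final List<OutputStream> clients = new CopyOnWriteArrayList<>();
    private byte[] header; // FLV header, written once by the producer; guarded by "this"

    // Producer: called once, right after the recorder has written the FLV header.
    synchronized void publishHeader(byte[] flvHeader) {
        header = flvHeader;
        broadcast(flvHeader);
    }

    // Request threads: late joiners receive the cached header before any media data.
    synchronized boolean addClient(OutputStream out) {
        try {
            if (header != null) {
                out.write(header);
                out.flush();
            }
        } catch (IOException e) {
            return false; // client already gone; do not register it
        }
        clients.add(out);
        return true;
    }

    // Producer: called for every chunk of FLV tags; clients whose write fails are dropped.
    void broadcast(byte[] chunk) {
        for (OutputStream out : clients) {
            try {
                out.write(chunk);
            } catch (IOException e) {
                clients.remove(out);
            }
        }
    }

    // The producer loop stops once this reaches zero.
    int clientCount() {
        return clients.size();
    }
}
```

`CopyOnWriteArrayList` is chosen because clients are added from request threads while the producer iterates; its snapshot iterators tolerate both additions and removals during a broadcast.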

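Next is the snapshot code. The requirement is to capture a picture from a device in real time, whenever the front end asks for one. OK, fine: the front end is the boss, so if they say capture, I capture…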

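The SDK call sequence is the same as described at the beginning: load the DLL library >> initialize the SDK >> log in to the device >> capture >> log out of the device >> release SDK resources.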
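Of that sequence, only the middle part (log in >> capture >> log out) runs per request, and it is easy to leak a login on an early error return. One way to make the logout unconditional is a small "loan" helper. A sketch, assuming a hypothetical `DeviceSession` class: `login` would wrap `Login_V40`, `logout` would wrap `NET_DVR_Logout`, and `action` would do the capture; none of these names come from the SDK itself.

```java
import java.util.function.IntFunction;
import java.util.function.IntPredicate;
import java.util.function.IntSupplier;

// Hypothetical "loan" helper: log in, lend the user ID to one action, always log out afterwards.
class DeviceSession {
    static <T> T withLogin(IntSupplier login, IntPredicate logout, IntFunction<T> action, T onLoginFailure) {
        int userId = login.getAsInt(); // like NET_DVR_Login_V40: -1 on failure
        if (userId < 0) {
            return onLoginFailure; // nothing to log out
        }
        try {
            return action.apply(userId);
        } finally {
            logout.test(userId); // like NET_DVR_Logout; real code would log a false result
        }
    }
}
```

In `getDVRPic`, `action` would run the work-state check and `NET_DVR_CaptureJPEGPicture` and return an `AjaxResult`, while `onLoginFailure` would carry the login error.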

But we already initialized the SDK and loaded the DLL library when the program started, so we can go straight to logging in and capturing:

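Controller:

    @GetMapping("/monitor/cutPic")
    public AjaxResult cutPic(String serialNo) {
        return itdMonitorService.cutPic(serialNo);
    }

Service, again only the implementation class:

    @Override
    public AjaxResult cutPic(String serialNo) {
        TDMonitor monitor = monitorMapper.findCameraInfoById(serialNo);
        if (monitor == null) {
            return AjaxResult.error("Camera not found: " + serialNo);
        }
        // 8000 is the SDK service port (not the RTSP port 554)
        Device dvr = new Device(monitor.getCameraIP(), monitor.getUsername(), monitor.getPassword(), (short) 8000);
        // Return the SDK result so the caller gets the image path or the error code
        return new HCUtils().getDVRPic(dvr, "E:\\pic\\");
    }

The remaining related entity classes, DTOs, VOs and so on: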

Device login parameters:

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    // Lombok generates the getters and setters (including getPort) and both constructors
    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class Device {
        private String ip;
        private String userName;
        private String passWord;
        // SDK service port, 8000 by default
        private short port = 8000;
    }

SDK callback:

    package cn.com.guanfang.SDK;

    import com.sun.jna.Pointer;
    import com.sun.jna.ptr.ByteByReference;

    import java.nio.ByteBuffer;

    import static cn.com.guanfang.SDK.HCNetSDK.NET_DVR_STREAMDATA;

    public class FRealDataCallBack implements HCNetSDK.FRealDataCallBack_V30 {
        @Override
        public void invoke(int lRealHandle, int dwDataType, ByteByReference pBuffer, int dwBufSize, Pointer pUser) {
            switch (dwDataType) {
                case NET_DVR_STREAMDATA: // stream data
                    // The buffer belongs to the SDK, so copy it into a Java array before returning
                    ByteBuffer buffers = pBuffer.getPointer().getByteBuffer(0, dwBufSize);
                    byte[] bytes = new byte[dwBufSize];
                    buffers.rewind();
                    buffers.get(bytes);
                    try {
                        // Look up the matching output stream in a map and write the data to it
                        // outputStreamMap.get(lRealHandle).write(bytes);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                    break;
                default:
                    break;
            }
        }
    }

Loading the DLL resources (OS detection):

    public class osSelect {
        public static boolean isLinux() {
            return System.getProperty("os.name").toLowerCase().contains("linux");
        }

        public static boolean isWindows() {
            return System.getProperty("os.name").toLowerCase().contains("windows");
        }
    }

Done. If you find any code I forgot to paste, leave a comment or send me a message and I'll add it. This took me a whole morning to write, so if you take it without leaving a like, how are you any different from someone who grabs the goods and bolts without paying!!!

Source: https://blog.csdn.net/weixin_47180824/article/details/127315148


Title: Integrating the Hikvision camera SDK for live preview and snapshot capture (a treat for the lazy: ready to copy and paste)

Link: https://www.lsjlt.com/news/371727.html (please credit the source link when reposting)
