Python 官方文档:入门教程 => 点击学习

YOLOv5-seg数据集制作、模型训练以及TensorRT部署 版本声明一、数据集制作:图像 Json转txt二、分割模型训练三 tensorRT部署1 模型导出2 onnx转trtmode

yolov5-seg:官方地址:https://github.com/ultralytics/yolov5/tree/v6.2

TensorRT:8.x.x

语言:c++

系统:ubuntu18.04

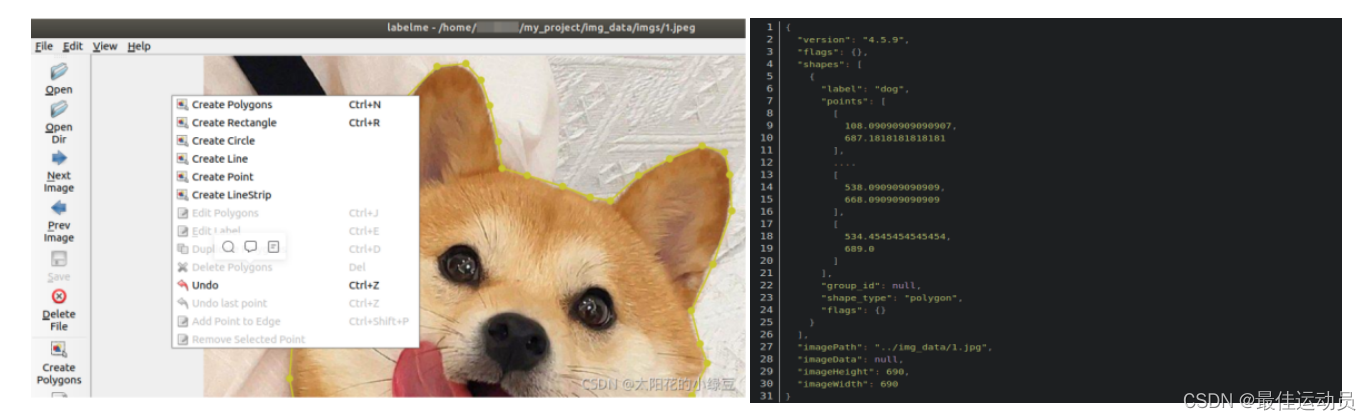

前言:由于yolo仓中提供了标准coco的json文件转txt代码,因此需要将labelme的json文件转为coco json.

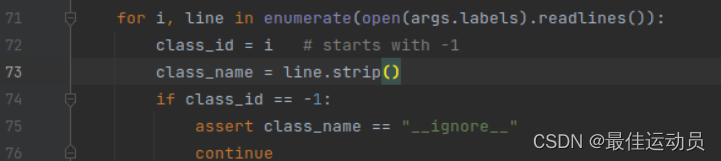

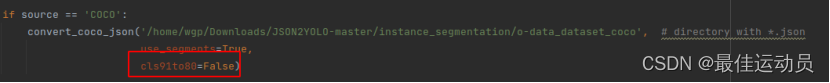

注意:由于自定义的数据集里面标签从0开始 不包括背景 直接转换会报错。修改72行。

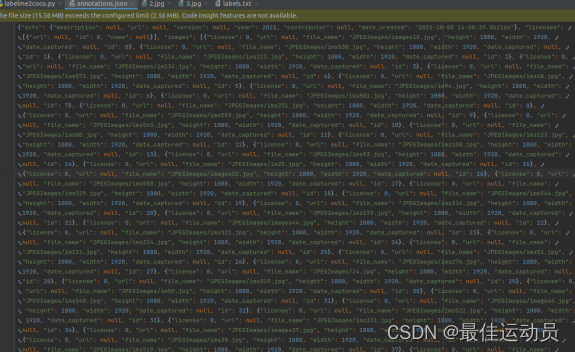

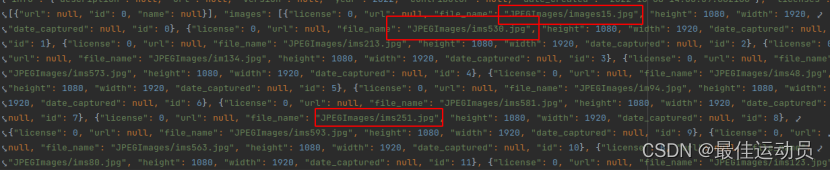

生成三个文件JPEGImages、 Visualization 、annotations.json

JPEGImages中为原图,annotations.json里面是coco格式的文件:

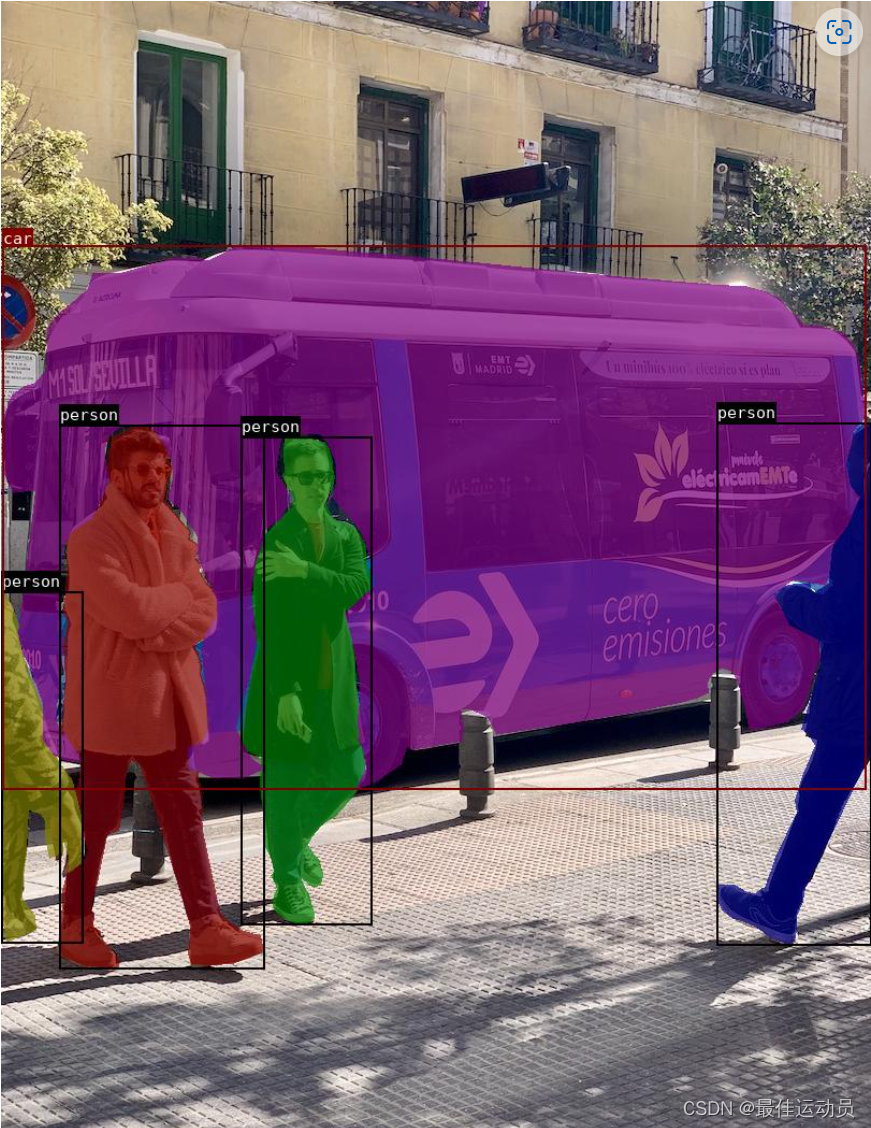

Visualization中的图如下:

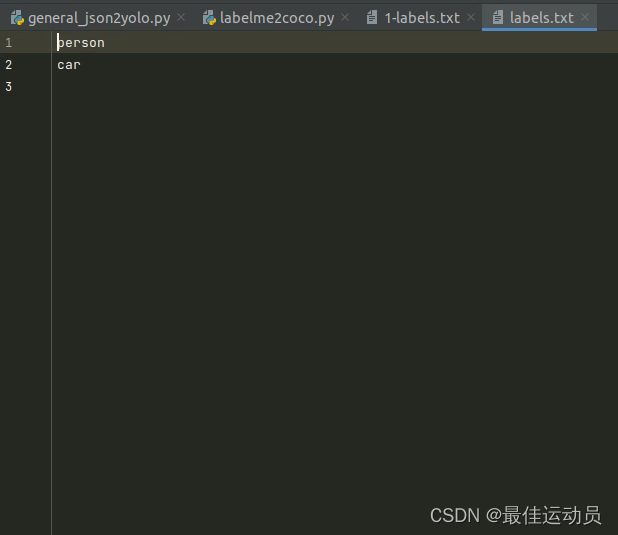

转换前需要自定义label.txt

再次运行,报下一个错误:

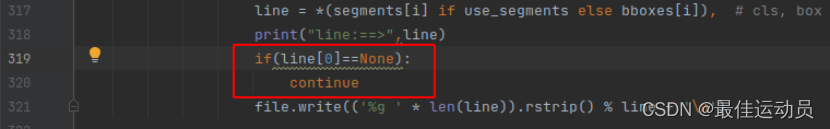

TypeError: must be real number, not NoneType

错误指向:

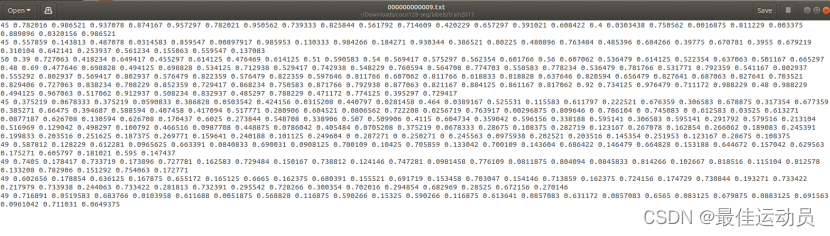

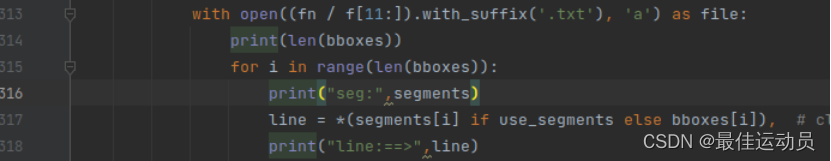

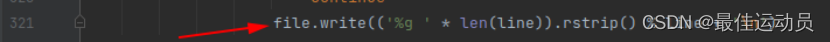

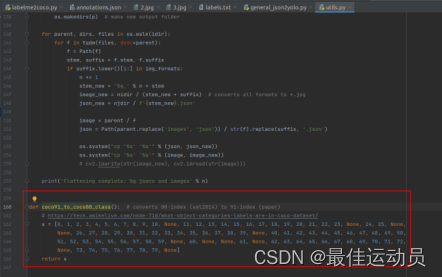

观察文件夹中,已经生成一个xxx.txt且有部分数据,打印line之后发现数据里有[None,point…point]这样的数据。 大体知道了:应该是生成了背景类且没有标签。修改代码跳过这些标签:

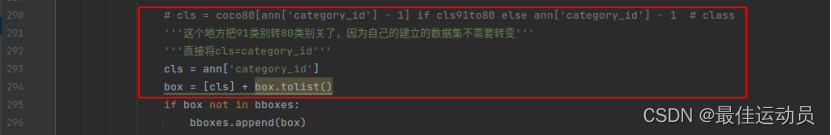

再次运行报错消失,执行完毕没有报错。以为成功了打开txt一个最大的标签仅仅为13,应该是到15(我的数据集一共十六类),中间有几类被消除了,排查错误。应该是这个地方把91–>80类的函数的问题。修改一番,两个地方。(若只修改第二处 会出现-1标签,最高到14)

也可以只修改第二处:再修改代码:

也可以只修改第二处:再修改代码:

下面展示一些 内联代码片。

cls = coco80[ann['category_id'] - 1] if cls91to80 else ann['category_id'] - 1 # classcls = coco80[ann['category_id']] if cls91to80 else ann['category_id'] - 1 # classcoco91_to_coco80_class()函数:

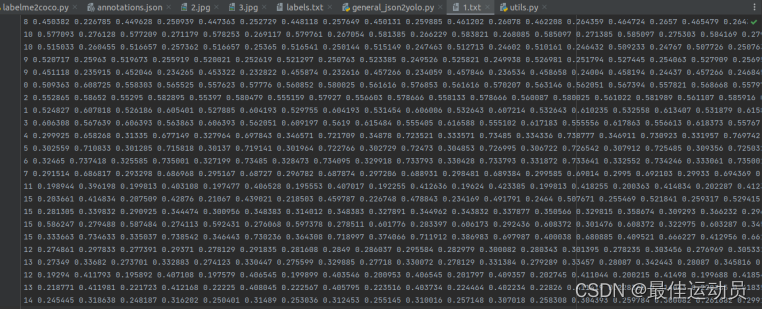

排除完毕以上错误时,再次运行,没有错误了。

import contextlibimport jsonimport cv2import pandas as pdfrom PIL import Imagefrom collections import defaultdictfrom utils import *# Convert INFOLKS JSON file into YOLO-fORMat labels ----------------------------def convert_infolks_json(name, files, img_path): # Create folders path = make_dirs() # Import json data = [] for file in glob.glob(files): with open(file) as f: jdata = json.load(f) jdata['json_file'] = file data.append(jdata) # Write images and shapes name = path + os.sep + name file_id, file_name, wh, cat = [], [], [], [] for x in tqdm(data, desc='Files and Shapes'): f = glob.glob(img_path + Path(x['json_file']).stem + '.*')[0] file_name.append(f) wh.append(exif_size(Image.open(f))) # (width, height) cat.extend(a['classTitle'].lower() for a in x['output']['objects']) # categories # filename with open(name + '.txt', 'a') as file: file.write('%s\n' % f) # Write *.names file names = sorted(np.unique(cat)) # names.pop(names.index('Missing product')) # remove with open(name + '.names', 'a') as file: [file.write('%s\n' % a) for a in names] # Write labels file for i, x in enumerate(tqdm(data, desc='Annotations')): label_name = Path(file_name[i]).stem + '.txt' with open(path + '/labels/' + label_name, 'a') as file: for a in x['output']['objects']: # if a['classTitle'] == 'Missing product': # continue # skip category_id = names.index(a['classTitle'].lower()) # The INFOLKS bounding box format is [x-min, y-min, x-max, y-max] box = np.array(a['points']['exterior'], dtype=np.float32).ravel() box[[0, 2]] /= wh[i][0] # normalize x by width box[[1, 3]] /= wh[i][1] # normalize y by height box = [box[[0, 2]].mean(), box[[1, 3]].mean(), box[2] - box[0], box[3] - box[1]] # xywh if (box[2] > 0.) and (box[3] > 0.): # if w > 0 and h > 0 file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box)) # Split data into train, test, and validate files split_files(name, file_name) write_data_data(name + '.data', nc=len(names)) print(f'Done. Output saved to {os.getcwd() + os.sep + path}')# Convert vott JSON file into YOLO-format labels -------------------------------def convert_vott_json(name, files, img_path): # Create folders path = make_dirs() name = path + os.sep + name # Import json data = [] for file in glob.glob(files): with open(file) as f: jdata = json.load(f) jdata['json_file'] = file data.append(jdata) # Get all categories file_name, wh, cat = [], [], [] for i, x in enumerate(tqdm(data, desc='Files and Shapes')): with contextlib.suppress(Exception): cat.extend(a['tags'][0] for a in x['regions']) # categories # Write *.names file names = sorted(pd.unique(cat)) with open(name + '.names', 'a') as file: [file.write('%s\n' % a) for a in names] # Write labels file n1, n2 = 0, 0 missing_images = [] for i, x in enumerate(tqdm(data, desc='Annotations')): f = glob.glob(img_path + x['asset']['name'] + '.jpg') if len(f): f = f[0] file_name.append(f) wh = exif_size(Image.open(f)) # (width, height) n1 += 1 if (len(f) > 0) and (wh[0] > 0) and (wh[1] > 0): n2 += 1 # append filename to list with open(name + '.txt', 'a') as file: file.write('%s\n' % f) # write labelsfile label_name = Path(f).stem + '.txt' with open(path + '/labels/' + label_name, 'a') as file: for a in x['regions']: category_id = names.index(a['tags'][0]) # The INFOLKS bounding box format is [x-min, y-min, x-max, y-max] box = a['boundingBox'] box = np.array([box['left'], box['top'], box['width'], box['height']]).ravel() box[[0, 2]] /= wh[0] # normalize x by width box[[1, 3]] /= wh[1] # normalize y by height box = [box[0] + box[2] / 2, box[1] + box[3] / 2, box[2], box[3]] # xywh if (box[2] > 0.) and (box[3] > 0.): # if w > 0 and h > 0file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box)) else: missing_images.append(x['asset']['name']) print('Attempted %g json imports, found %g images, imported %g annotations successfully' % (i, n1, n2)) if len(missing_images): print('WARNING, missing images:', missing_images) # Split data into train, test, and validate files split_files(name, file_name) print(f'Done. Output saved to {os.getcwd() + os.sep + path}')# Convert ath JSON file into YOLO-format labels --------------------------------def convert_ath_json(json_dir): # dir contains json annotations and images # Create folders dir = make_dirs() # output directory jsons = [] for dirpath, dirnames, filenames in os.walk(json_dir): jsons.extend( os.path.join(dirpath, filename) for filename in [ f for f in filenames if f.lower().endswith('.json') ] ) # Import json n1, n2, n3 = 0, 0, 0 missing_images, file_name = [], [] for json_file in sorted(jsons): with open(json_file) as f: data = json.load(f) # # Get classes # try: # classes = list(data['_via_attributes']['region']['class']['options'].values()) # classes # except: # classes = list(data['_via_attributes']['region']['Class']['options'].values()) # classes # # Write *.names file # names = pd.unique(classes) # preserves sort order # with open(dir + 'data.names', 'w') as f: # [f.write('%s\n' % a) for a in names] # Write labels file for x in tqdm(data['_via_img_metadata'].values(), desc=f'Processing {json_file}'): image_file = str(Path(json_file).parent / x['filename']) f = glob.glob(image_file) # image file if len(f): f = f[0] file_name.append(f) wh = exif_size(Image.open(f)) # (width, height) n1 += 1 # all images if len(f) > 0 and wh[0] > 0 and wh[1] > 0: label_file = dir + 'labels/' + Path(f).stem + '.txt' nlabels = 0 try: with open(label_file, 'a') as file: # write labelsfile# try:# category_id = int(a['region_attributes']['class'])# except:# category_id = int(a['region_attributes']['Class'])category_id = 0 # single-classfor a in x['regions']: # bounding box format is [x-min, y-min, x-max, y-max] box = a['shape_attributes'] box = np.array([box['x'], box['y'], box['width'], box['height']], dtype=np.float32).ravel() box[[0, 2]] /= wh[0] # normalize x by width box[[1, 3]] /= wh[1] # normalize y by height box = [box[0] + box[2] / 2, box[1] + box[3] / 2, box[2], box[3]] # xywh (left-top to center x-y) if box[2] > 0. and box[3] > 0.: # if w > 0 and h > 0 file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box)) n3 += 1 nlabels += 1 if nlabels == 0: # remove non-labelled images from datasetos.system(f'rm {label_file}')# print('no labels for %s' % f)continue # next file # write image img_size = 4096 # resize to maximum img = cv2.imread(f) # BGR assert img is not None, 'Image Not Found ' + f r = img_size / max(img.shape) # size ratio if r < 1: # downsize if necessaryh, w, _ = img.shapeimg = cv2.resize(img, (int(w * r), int(h * r)), interpolation=cv2.INTER_AREA) ifile = dir + 'images/' + Path(f).name if cv2.imwrite(ifile, img): # if success append image to listwith open(dir + 'data.txt', 'a') as file: file.write('%s\n' % ifile)n2 += 1 # correct images except Exception: os.system(f'rm {label_file}') print(f'problem with {f}') else: missing_images.append(image_file) nm = len(missing_images) # number missing print('\nFound %g JSONs with %g labels over %g images. Found %g images, labelled %g images successfully' % (len(jsons), n3, n1, n1 - nm, n2)) if len(missing_images): print('WARNING, missing images:', missing_images) # Write *.names file names = ['knife'] # preserves sort order with open(dir + 'data.names', 'w') as f: [f.write('%s\n' % a) for a in names] # Split data into train, test, and validate files split_rows_simple(dir + 'data.txt') write_data_data(dir + 'data.data', nc=1) print(f'Done. Output saved to {Path(dir).absolute()}')def convert_coco_json(json_dir='../coco/annotations/', use_segments=False, cls91to80=False): save_dir = make_dirs() # output directory coco80 = coco91_to_coco80_class() # Import json for json_file in sorted(Path(json_dir).resolve().glob('*.json')): fn = Path(save_dir) / 'labels' / json_file.stem.replace('instances_', '') # folder name fn.mkdir() with open(json_file) as f: data = json.load(f) print(data) # Create image dict images = {'%g' % x['id']: x for x in data['images']} # Create image-annotations dict imgToAnns = defaultdict(list) for ann in data['annotations']: imgToAnns[ann['image_id']].append(ann) # Write labels file for img_id, anns in tqdm(imgToAnns.items(), desc=f'Annotations {json_file}'): img = images['%g' % img_id] h, w, f = img['height'], img['width'], img['file_name'] bboxes = [] segments = [] for ann in anns: if ann['iscrowd']: continue # The COCO box format is [top left x, top left y, width, height] box = np.array(ann['bbox'], dtype=np.float64) box[:2] += box[2:] / 2 # xy top-left corner to center box[[0, 2]] /= w # normalize x box[[1, 3]] /= h # normalize y if box[2] <= 0 or box[3] <= 0: # if w <= 0 and h <= 0 continue #cls = coco80[ann['category_id'] - 1] if cls91to80 else ann['category_id'] - 1 # class '''这个地方把91类别转80类别关了,因为自己的建立的数据集不需要转变''' '''直接将cls=category_id''' cls = ann['category_id'] box = [cls] + box.tolist() if box not in bboxes: bboxes.append(box) # Segments if use_segments: if len(ann['segmentation']) > 1: s = merge_multi_segment(ann['segmentation']) s = (np.concatenate(s, axis=0) / np.array([w, h])).reshape(-1).tolist() else: s = [j for i in ann['segmentation'] for j in i] # all segments concatenated s = (np.array(s).reshape(-1, 2) / np.array([w, h])).reshape(-1).tolist() s = [cls] + s if s not in segments: segments.append(s) # Write print("fn/f==>",fn/f[11:]) print("fn==>",fn) print("f==>",f) with open((fn / f[11:]).with_suffix('.txt'), 'a') as file: print(len(bboxes)) for i in range(len(bboxes)): print("seg:",segments) line = *(segments[i] if use_segments else bboxes[i]), # cls, box or segments print("line:==>",line) if(line[0]==None): continue file.write(('%g ' * len(line)).rstrip() % line + '\n')def min_index(arr1, arr2): """Find a pair of indexes with the shortest distance. Args: arr1: (N, 2). arr2: (M, 2). Return: a pair of indexes(tuple). """ dis = ((arr1[:, None, :] - arr2[None, :, :]) ** 2).sum(-1) return np.unravel_index(np.argmin(dis, axis=None), dis.shape)def merge_multi_segment(segments): """Merge multi segments to one list. Find the coordinates with min distance between each segment, then connect these coordinates with one thin line to merge all segments into one. Args: segments(List(List)): original segmentations in coco's json file. like [segmentation1, segmentation2,...], each segmentation is a list of coordinates. """ s = [] segments = [np.array(i).reshape(-1, 2) for i in segments] idx_list = [[] for _ in range(len(segments))] # record the indexes with min distance between each segment for i in range(1, len(segments)): idx1, idx2 = min_index(segments[i - 1], segments[i]) idx_list[i - 1].append(idx1) idx_list[i].append(idx2) # use two round to connect all the segments for k in range(2): # forward connection if k == 0: for i, idx in enumerate(idx_list): # middle segments have two indexes # reverse the index of middle segments if len(idx) == 2 and idx[0] > idx[1]: idx = idx[::-1] segments[i] = segments[i][::-1, :] segments[i] = np.roll(segments[i], -idx[0], axis=0) segments[i] = np.concatenate([segments[i], segments[i][:1]]) # deal with the first segment and the last one if i in [0, len(idx_list) - 1]: s.append(segments[i]) else: idx = [0, idx[1] - idx[0]] s.append(segments[i][idx[0]:idx[1] + 1]) else: for i in range(len(idx_list) - 1, -1, -1): if i not in [0, len(idx_list) - 1]: idx = idx_list[i] nidx = abs(idx[1] - idx[0]) s.append(segments[i][nidx:]) return sdef delete_dsstore(path='../datasets'): # Delete apple .DS_store files from pathlib import Path files = list(Path(path).rglob('.DS_store')) print(files) for f in files: f.unlink()if __name__ == '__main__': source = 'COCO' if source == 'COCO': convert_coco_json('写自己的路径', # directory with *.json use_segments=True, cls91to80=False) elif source == 'infolks': # Infolks Https://infolks.info/ convert_infolks_json(name='out', files='../data/sm4/json/*.json', img_path='../data/sm4/images/') elif source == 'vott': # VoTT https://github.com/microsoft/VoTT convert_vott_json(name='data', files='../../Downloads/athena_day/20190715/*.json', img_path='../../Downloads/athena_day/20190715/') # images folder elif source == 'ath': # ath format convert_ath_json(json_dir='../../Downloads/athena/') # images folder # zip results # os.system('zip -r ../coco.zip ../coco')训练的步骤和目标检测模型一致,下载模型 yolov5s-seg.pt,划分数据集 、修改配置文件、不再详述了。

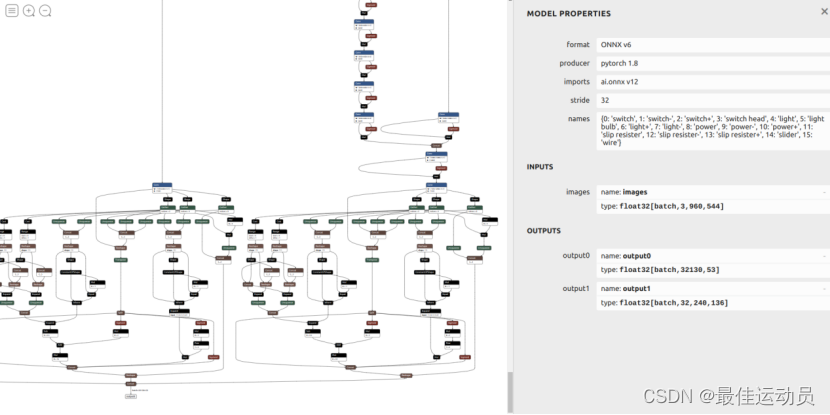

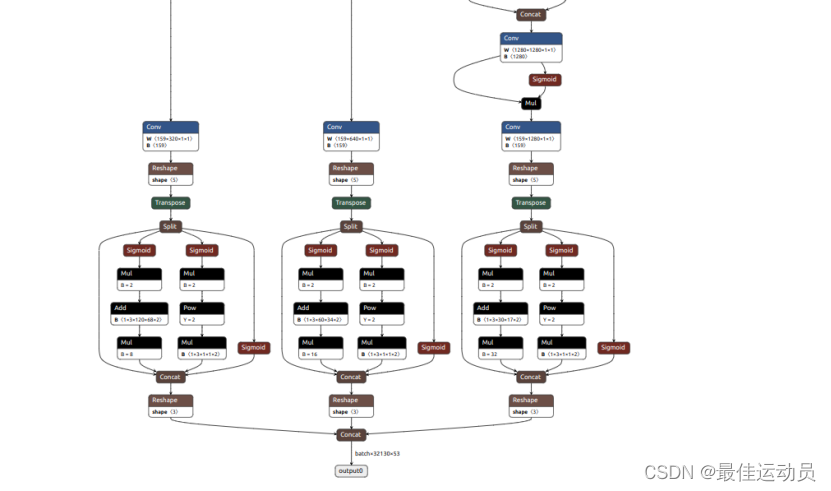

使用官方的export.py文件直接导出时,netron可视化之后如下:

onnx比较混乱,需要进一步修改,所有的修改如下,参考杜老的仓link:https://github.com/shouxieai/learning-cuda-trt/tree/main:

# line 55 forward function in yolov5/models/yolo.py # bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)# x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()# modified into:bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)bs = -1ny = int(ny)nx = int(nx)x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()# line 70 in yolov5/models/yolo.py# z.append(y.view(bs, -1, self.no))# modified into:z.append(y.view(bs, self.na * ny * nx, self.no))############# for yolov5-6.0 ###################### line 65 in yolov5/models/yolo.py# if self.grid[i].shape[2:4] != x[i].shape[2:4] or self.onnx_dynamic:# self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)# modified into:if self.grid[i].shape[2:4] != x[i].shape[2:4] or self.onnx_dynamic: self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)# disconnect for PyTorch traceanchor_grid = (self.anchors[i].clone() * self.stride[i]).view(1, -1, 1, 1, 2)# line 70 in yolov5/models/yolo.py# y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh# modified into:y[..., 2:4] = (y[..., 2:4] * 2) ** 2 * anchor_grid # wh# line 73 in yolov5/models/yolo.py# wh = (y[..., 2:4] * 2) ** 2 * self.anchor_grid[i] # wh# modified into:wh = (y[..., 2:4] * 2) ** 2 * anchor_grid # wh############# for yolov5-6.0 ###################### line 77 in yolov5/models/yolo.py# return x if self.training else (torch.cat(z, 1), x)# modified into:return x if self.training else torch.cat(z, 1)# line 52 in yolov5/export.py# torch.onnx.export(dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'}, # shape(1,3,640,640)# 'output': {0: 'batch', 1: 'anchors'} # shape(1,25200,85) 修改为# modified into:torch.onnx.export(dynamic_axes={'images': {0: 'batch'}, # shape(1,3,640,640) 'output': {0: 'batch'} # shape(1,25200,85) 由于版本不同修改的地方也稍有改变

修改后:

导出指令:python export.py --weights runs/train-seg/exp3/weights/best.pt --include onnx --dynamic

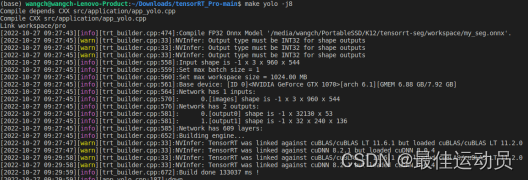

TRT::compile( mode, // FP32、FP16、INT8 test_batch_size, // max batch size onnx_file, // source model_file, // save to {}, int8process, "inference" );

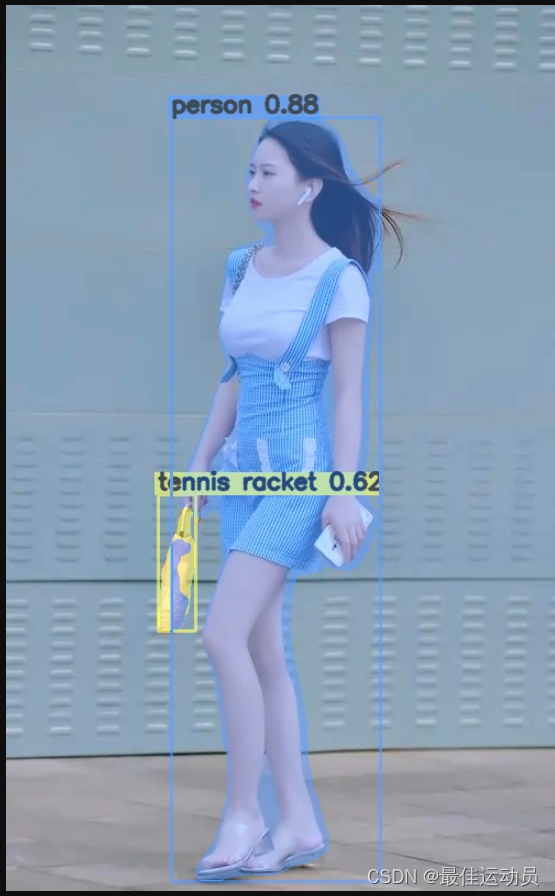

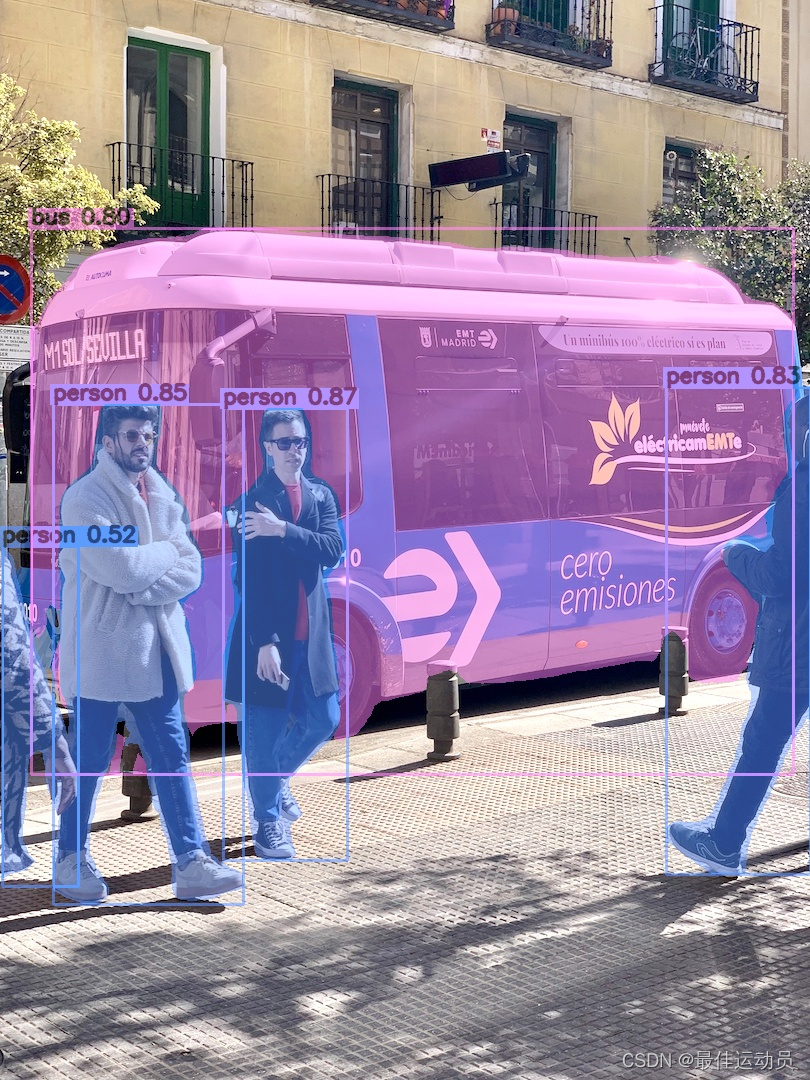

static void inference(Type type, TRT::Mode mode, const string& model_file){ auto engine = TRT::load_infer(model_file); if(engine == nullptr){ INFOE("Engine is nullptr"); return; } auto image = cv::imread("xxx.jpg"); //绘制结果 int col=image.cols; //1920 int row=image.rows; //1080 Mat mask_seg=image.clone(); Mat mask_box=image.clone();//3 channel Mat cut_img=image.clone();auto input = engine->tensor("images"); // engine->input(0); auto output = engine->tensor("output0"); // engine->output(1);//[batch , 32130 , 53] auto output1 = engine->tensor("output1"); // (batch, 32, 136, 240) ==>(16,32,136,240) int num_bboxes = output->size(1);//32130 int num_classes = output->size(2) - 5 ; float confidence_threshold = 0.5; float nms_threshold = 0.45; int MAX_IMAGE_BBOX = 1000; int NUM_BOX_ELEMENT = 39; // left, top, right, bottom, confidence, class, keepflag ,32 mask int netWidth = 640; int netHeigh = 640; int segWidth = 160; int segHeight = 160; float mask_thresh = 0.2; TRT::Tensor output_array_device(TRT::DataType::Float); // use max = 1 batch to inference. int max_batch_size = 1; input->resize_single_dim(0, max_batch_size).to_gpu(); output_array_device.resize(max_batch_size, 1 + MAX_IMAGE_BBOX * NUM_BOX_ELEMENT).to_gpu(); output_array_device.set_stream(engine->get_stream()); // set batch = 1 image int ibatch = 0; image_to_tensor(image, input, type, ibatch); // do async 异步 engine->forward(false);float* output_ptr = output1->cpu<float>();//vector 2 mat int size[]={32,segHeight,segWidth}; //cout<<"size"< cv::Mat mask_protos = cv::Mat_<float>(3,size,CV_8UC1); for(int iii=0;iii<32;iii++) { //unchar *data=mask_protos.ptr(iii); for(int jjj=0;jjj<segHeight;jjj++) { //unchar *data2=data.ptr(jjj); for(int kkk=0;kkk<segWidth;kkk++) { //data2[kkk]=output_ptr[iii*136*240+jjj*240+kkk]; mask_protos.at<float>(iii,jjj,kkk)=output_ptr[iii*segHeight*segWidth+jjj*segWidth+kkk]; } } } float* d2i_affine_matrix = static_cast<float*>(input->get_workspace()->gpu()); Yolo::decode_kernel_invoker( output->gpu<float>(ibatch), num_bboxes, num_classes, confidence_threshold, d2i_affine_matrix, output_array_device.gpu<float>(ibatch), MAX_IMAGE_BBOX, engine->get_stream() ); Yolo::nms_kernel_invoker( output_array_device.gpu<float>(ibatch), nms_threshold, MAX_IMAGE_BBOX, engine->get_stream() ); float* parray = output_array_device.cpu<float>(); int num_box = min(static_cast<int>(*parray), MAX_IMAGE_BBOX);//取最小值 //new a mat and new a vector Mat mask_proposals; vector<OutputSeg> f_output; vector<vector<float>>proposal; //[23,32] output0 =>mask int num_box1=0; Rect holeImgRect(0, 0, col, row); for(int i = 0; i < num_box; ++i){ //遍历所有的框 float* pbox = parray + 1 + i * NUM_BOX_ELEMENT;//+1+i*7 1:表示这个数组的元素数量 int keepflag = pbox[6]; vector<float> temp; OutputSeg result; if(keepflag == 1 ){ num_box1+=1; // left, top, right, bottom, confidence,class, keepflag // pbox[0], pbox[1], pbox[2], pbox[3], pbox[4], pbox[5], pbox[6] float left = pbox[0]; float top = pbox[1]; float right = pbox[2]; float bottom = pbox[3]; float confidence = pbox[4]; for(int ii=0;ii<32;ii++) { temp.push_back(pbox[ii+7]); } proposal.push_back(temp); result.id=pbox[5]; result.confidence=pbox[4]; cv::Rect rect(left, top, right-left, bottom-top); result.box=rect & holeImgRect;//; //x,y,w,h f_output.push_back(result); int label = static_cast<int>(pbox[5]); uint8_t b, g, r; tie(b, g, r) = iLogger::random_color(label); cv::rectangle(image, cv::Point(left, top), cv::Point(right, bottom), cv::Scalar(b, g, r), 3); auto name = cocolabels[label]; auto caption = iLogger::format("%s %.2f", name, confidence); int width = cv::getTextSize(caption, 0, 1, 1, nullptr).width + 10; cv::rectangle(image, cv::Point(left-3, top-33), cv::Point(left + width, top), cv::Scalar(b, g, r), -1); cv::putText(image, caption, cv::Point(left, top-5), 0, 1, cv::Scalar::all(0), 2, 16); }//对应于Python中的process_mask //vector2mat for (int i = 0; i < proposal.size(); ++i) {mask_proposals.push_back(Mat(proposal[i]).t());}/获取 proto 也就是output1的输出 //逻辑 GetMask Vec4d params; //根据实际图片输入 和 onnx模型输入输出 计算的,此处直接写死 params[0]=0.5; params[1]=0.5; params[2]=0.0; params[3]=2.0; Mat protos = mask_protos.reshape(0, {32,136 * 240}); Mat matmulRes = ( mask_proposals * protos).t(); //23,32 * 32,32640 ==> 23,32640 Mat masks = matmulRes.reshape(proposal.size(),{136,240}); //上一步骤作转置的原因://Mat Mat::reshape(int cn,int rows=0) const cn:表示通道数(channels),如果设置为0,则表示通道不变; vector<Mat> maskChannels; //分离通道split(masks, maskChannels); for (int index = 0; index < f_output.size(); ++index) { Mat dest,mask; //sigmoid cv::exp(-maskChannels[index],dest);//e^x dest= 1.0/(1.0 + dest); //_netWidth = 960; _netHeight=544; //ONNX图片输入宽度\高度 //const int _segWidth = 240;Rect roi(int(params[2] / netWidth * segWidth), int(params[3] / netHeigh * segHeight), int(segWidth - params[2] / 2), int(segHeight- 0/2)); //136-params[3]/2最后一个参数改了 mask会有偏移dest = dest(roi);resize(dest, mask, cv::Size(col,row), INTER_LINEAR);//srcImgShape (1920,1080)//INTER_NEAREST 最近临插值 PYTHON中用的就是 INTER_LINEAR - 双线性插值 //cropRect temp_rect = f_output[index].box;mask = mask(temp_rect) > mask_thresh; //mask_threshg mask阈值f_output[index].boxMask =mask; } //DrawPred 绘制结果 for (int i=0;i<f_output.size();i++) { int lf, tp,wd,hg; float confidence; lf=f_output[i].box.x; tp=f_output[i].box.y; wd=f_output[i].box.width; hg=f_output[i].box.height; confidence=f_output[i].confidence; int label = static_cast<int>(f_output[i].id); //生成随机颜色 uint8_t b, g, r; tie(b, g, r) = iLogger::random_color(label); cv::rectangle(mask_box, cv::Point(lf, tp), cv::Point(lf+wd, tp+hg), cv::Scalar(b, g, r), 3);//绘制box框 auto name = cocolabels[label]; auto caption = iLogger::format("%s %.2f", name, confidence); int width = cv::getTextSize(caption, 0, 1, 1, nullptr).width + 10; cv::rectangle(mask_box, cv::Point(lf-3, tp-33), cv::Point(lf + width, tp), cv::Scalar(b, g, r), -1);//绘制label的框 cv::putText(mask_box, caption, cv::Point(lf, tp-5), 0, 1, cv::Scalar::all(0), 2, 16);mask_seg(f_output[i].box).setTo(cv::Scalar(b, g, r), f_output[i].boxMask);//绘制mask } addWeighted(mask_box, 0.6, mask_seg, 0.4, 0, mask_box); //将mask加在原图上面 } 效果展示:

来源地址:https://blog.csdn.net/qq_40198848/article/details/127937648

--结束END--

本文标题: 【深度学习】YOLOv5实例分割 数据集制作、模型训练以及TensorRT部署

本文链接: https://www.lsjlt.com/news/402772.html(转载时请注明来源链接)

有问题或投稿请发送至: 邮箱/279061341@qq.com QQ/279061341

下载Word文档到电脑,方便收藏和打印~

2024-03-01

2024-03-01

2024-03-01

2024-02-29

2024-02-29

2024-02-29

2024-02-29

2024-02-29

2024-02-29

2024-02-29

回答

回答

回答

回答

回答

回答

回答

回答

回答

回答

0